He’s gray-haired, bearded, wearing no shoes and standing at Rae Spencer’s doorstep.

“Babe,” the content creator wrote in a text to her husband. “Do you know this man? He says he knows you?”

Spencer’s husband, who responded immediately with “no,” appeared to express shock when she then sent more images of the man pictured inside their home, sitting on their couch and taking a nap on their bed. She said he FaceTimed her, “shaking” in fear.

But the man wasn’t real. Spencer, based in St. Augustine, Florida, had created the images using an artificial intelligence-based generator. She sent them to her husband and posted their exchange to TikTok as part of a viral trend that some online refer to as the “AI homeless man prank.”

Over 5 million people have liked Spencer’s video on TikTok, where the hashtag #homelessmanprank appears on more than 1,200 videos, most of them related to the recent trend. Some have also used the hashtag #homelessman to post their videos, all of which center on the idea of tricking people into believing that there is a stranger inside their home. Several people have posted tutorials about how to make the images, and the trend has spread to other social media platforms, including Snapchat and Instagram.

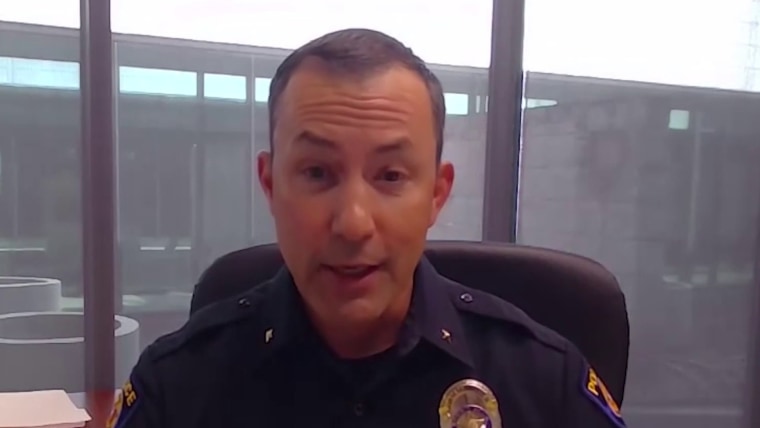

As the prank gains traction online, local authorities have started issuing warnings to participants, who they say are primarily teens, about the dangers of misusing AI to spread false information.

“Besides being in bad taste, there are many reasons why this prank is, to put it bluntly, stupid and potentially dangerous,” police officials in Salem, Massachusetts, wrote on their website this month. “This prank dehumanizes the homeless, causes the distressed recipient to panic and wastes police resources. Police officers who are called upon to respond do not know this is a prank and treat the call as an actual burglary in progress thus creating a potentially dangerous situation.”

Even overseas, some local officials have reported false home invasions tied to the trend. In the United Kingdom, Dorset Police issued a warning after the force deployed resources when it received a “call from an extremely concerned parent” last week, only to learn it was a prank, according to the BBC. An Garda Síochána, Ireland’s national police service, also posted a message on its Facebook and X pages, sharing two recent images authorities received that were made using generative AI tools.

The prank is the latest example of AI’s ability to deceive through fake imagery.

The proliferation of photorealistic AI image and video generators in recent years has given rise to an internet full of AI-made “slop”: media of fake people and scenarios that — despite exhibiting telltale signs of AI — fool many people online, especially older internet users. As the technologies grow more sophisticated, many find it even harder to distinguish between what’s real and what’s fake. Last year, Katy Perry shared that her own mother was tricked by an AI-generated image of her attending the Met Gala.

Even if most such cases don’t involve nefarious intent, the pranks underscore how easily AI can be used to manipulate real people. After the recent release of Sora 2, an OpenAI employee touted the video generator’s ability to create realistic security video of CEO Sam Altman stealing from Target, a clip that drew concern from some who worry about how AI might be used to carry out mass manipulation campaigns.

AI image and video generators typically put watermarks on their outputs to indicate the use of AI. But users can easily crop them out.

It’s unclear which specific AI models were used in many of the video pranks.

When NBC News asked OpenAI’s ChatGPT to “generate an image of a homeless man in my home,” the bot replied, “I can’t create or edit an image like that — it would involve depicting a real or implied person in a situation of homelessness, which could be considered exploitative or disrespectful.”

Asked the same question, Gemini, Google’s AI assistant, replied: “Absolutely. Here is the image you requested.”

OpenAI and Google didn’t immediately respond to requests for comment.

Representatives for Snap and Meta (which owns Instagram) didn’t provide comments.

Reached for comment, TikTok said it had added labels to the trend-related videos that NBC News flagged, clarifying that they are AI-generated content.